Compute ‘Internal Page Rank’

⚠️ THIS IS A WORK IN PROGRESS

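library(Rcrawler)

# crawling the website and collecting its link network (creates NetwIndex and NetwEdges)

Rcrawler(Website = "https://www.rforseo.com", NetworkData = TRUE)

View(NetwEdges)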

library(dplyr)

# grabbing the first two columns (link source and link target) and dropping duplicate links

links <- NetwEdges[, 1:2] %>% distinct()

# loading igraph package

library(igraph)

# loading the website's internal links into a graph object

g <- graph_from_data_frame(links)

# this is the main function: it computes a PageRank score for every page (vertex) of the graph

pr <- page_rank(g, algo = "prpack", vids = V(g), directed = TRUE, damping = 0.85)
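For readers who do want to ask how it works, here is a minimal sketch of the general idea behind PageRank (power iteration), written in base R on a made-up four-page site. The page names and the helper `toy_page_rank` are hypothetical and only for illustration; this is not igraph's implementation, just the same principle under simple assumptions.

```r
# Toy internal-link graph: each row is one link "from" -> "to".
# Page names are made up for illustration.
toy_links <- data.frame(
  from = c("home", "home", "blog", "about", "contact"),
  to   = c("blog", "about", "home", "home", "home"),
  stringsAsFactors = FALSE
)

# Hypothetical helper: PageRank computed by power iteration.
toy_page_rank <- function(links, damping = 0.85, iterations = 100) {
  pages <- unique(c(links$from, links$to))
  n <- length(pages)

  # m[i, j] = 1 when page j links to page i
  m <- matrix(0, n, n, dimnames = list(pages, pages))
  idx <- cbind(match(links$to, pages), match(links$from, pages))
  m[idx] <- 1

  # pages without outgoing links are treated as linking to every page
  m[, colSums(m) == 0] <- 1

  # each page splits its score evenly between the pages it links to
  m <- sweep(m, 2, colSums(m), "/")

  # start with equal scores, then redistribute them repeatedly
  pr <- rep(1 / n, n)
  for (i in seq_len(iterations)) {
    pr <- (1 - damping) / n + damping * as.vector(m %*% pr)
  }
  names(pr) <- pages
  pr
}

print(round(toy_page_rank(toy_links), 3))
```

In this toy site every other page links to "home", so "home" should end up with the highest score, while "contact", which receives no links, keeps only the baseline share.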

# grabbing the result inside a dedicated data frame

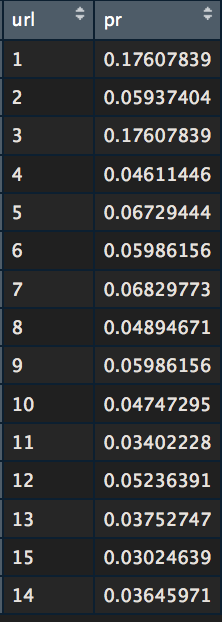

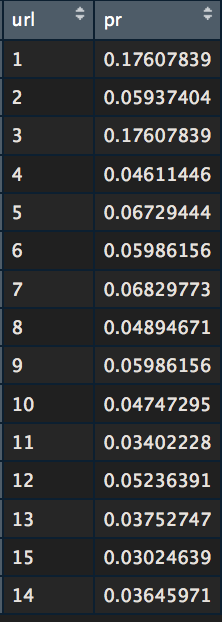

values <- data.frame(pr$vector)

values$names <- rownames(values)

# deleting row names

row.names(values) <- NULL

# reordering columns

values <- values[c(2,1)]

# renaming columns

names(values)[1] <- "url"

names(values)[2] <- "pr"

View(values)

# replacing id with url

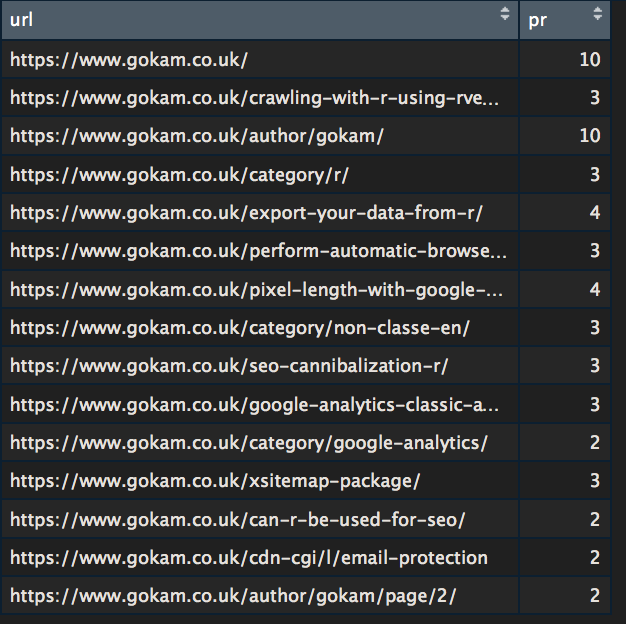

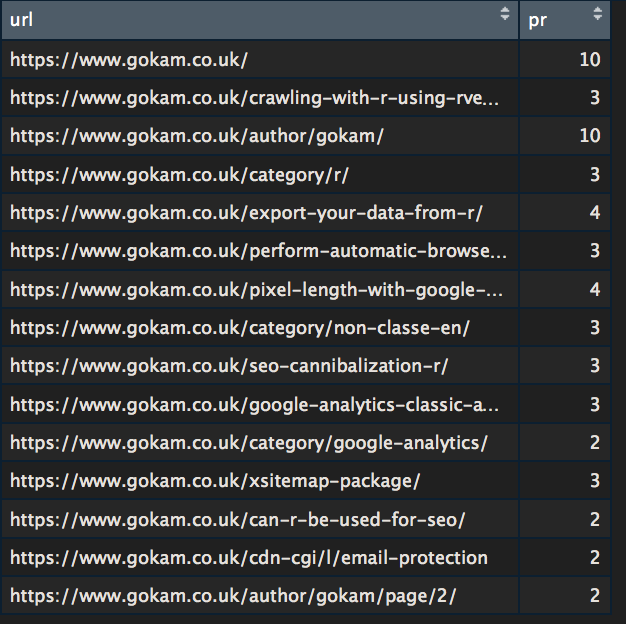

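# looking each id up in NetwIndex, since graph vertices are not guaranteed to follow the index order

values$url <- NetwIndex[as.integer(values$url)]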
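The order of the rows in `values` follows the order in which page ids first appear in the edge list, which may differ from the order of `NetwIndex`. A minimal sketch of an id-by-id lookup, using made-up URLs and a made-up results table (`toy_index` and `toy_values` are hypothetical stand-ins, not Rcrawler output):

```r
# Made-up stand-in for NetwIndex: position = page id, value = URL
toy_index <- c("https://example.com/",
               "https://example.com/blog",
               "https://example.com/about")

# Made-up results table: ids are stored as text and are not in index order
toy_values <- data.frame(url = c("3", "1", "2"),
                         pr  = c(0.2, 0.5, 0.3),
                         stringsAsFactors = FALSE)

# Looking each id up in the index instead of relying on row order
toy_values$url <- toy_index[as.integer(toy_values$url)]

print(toy_values)
```

Each score stays attached to the page it was computed for, even though the ids were shuffled.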

# rescaling to a score out of 10 (the strongest page gets 10)

values$pr <- round(values$pr / max(values$pr) * 10)

# display

View(values)
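To make the "out of 10" step concrete, here is a small sketch on made-up scores. The `toy_scores` table and its values are invented for illustration; sorting the result is an optional extra step, assuming the goal is to see the strongest pages first.

```r
# Made-up raw PageRank values for four pages
toy_scores <- data.frame(
  url = c("/", "/blog", "/about", "/contact"),
  pr  = c(0.40, 0.21, 0.13, 0.05),
  stringsAsFactors = FALSE
)

# Same rescaling as above: divide by the best score, multiply by 10, round
toy_scores$pr <- round(toy_scores$pr / max(toy_scores$pr) * 10)

# Optional: strongest pages first
toy_scores <- toy_scores[order(toy_scores$pr, decreasing = TRUE), ]

print(head(toy_scores, 3))
```

Because the scores are divided by the maximum, they are relative to the strongest page of this particular crawl, so they should not be compared directly between two different websites.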