Send requests to the Google Indexing API using googleAuthR

This guide was written by Ruben Vezzoli in July 2020. Ruben is a 25-year-old Italian data analyst; check out his website for other interesting R scripts.

Two years ago, Google introduced the Indexing API to solve a problem that affected job posting and livestreaming websites: outdated content in the index. The Google Developers documentation says:

“You can use the Indexing API to tell Google to update or remove pages from the Google index. The requests must specify the location of a web page. You can also get the status of notifications that you have sent to Google. Currently, the Indexing API can only be used to crawl pages with either job posting or livestream structured data.”

Many SEOs also use the Indexing API for non-job-related websites, which is why I decided to build an R script to try out the API (and it worked).

I’m going to show you how the script works, but don’t forget that the free quota is 200 URLs per day!

Create the Indexing API Credentials

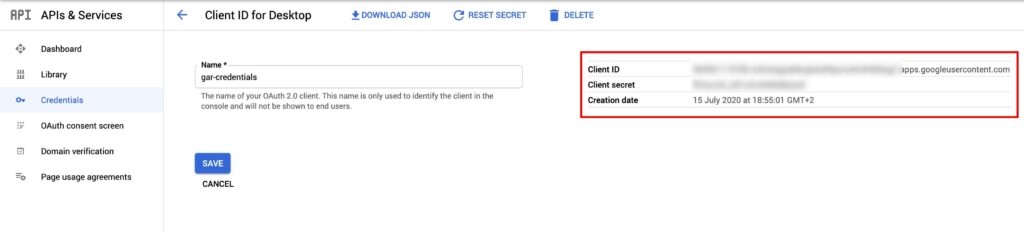

First of all, you have to generate the client ID and client secret for the API.

Open the Google API Console and go to the API Library.

Open the Indexing API page and enable the API. Then go to the Credentials page and there you’ll find your credentials.
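As a minimal sketch, the credentials from the console can be passed to googleAuthR through R options before authenticating. The client ID and secret below are placeholders, and the scope URL is the one I understand the Indexing API to require, so treat both as assumptions to check against your own project.

```r
# Placeholder credentials: replace with the values from the Credentials page.
options(
  googleAuthR.client_id = "YOUR_CLIENT_ID.apps.googleusercontent.com",
  googleAuthR.client_secret = "YOUR_CLIENT_SECRET",
  # Assumed OAuth scope for the Indexing API
  googleAuthR.scopes.selected = "https://www.googleapis.com/auth/indexing"
)

# Start the OAuth flow only in an interactive session with googleAuthR installed.
if (interactive() && requireNamespace("googleAuthR", quietly = TRUE)) {
  googleAuthR::gar_auth()
}
```

Setting the options before calling `gar_auth()` keeps the keys in one place; in a shared script you would probably read them from environment variables rather than hard-coding them.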

googleAuthR package & Indexing API options

I created the script using the googleAuthR package, which allows you to send requests to Google APIs.

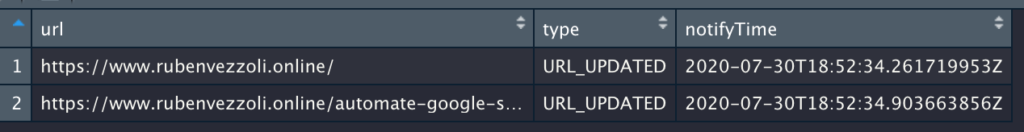

The code takes a character vector of at most 200 URLs as input and returns the API responses in a data frame (see the screenshot).
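The input/output shape described above can be sketched roughly as follows. This is not the original script: `send_urls`, `send_one` and `fake_send` are hypothetical names, and the request itself is replaced by an offline stand-in so the example runs without credentials. The commented lines show how a real sender might be built with `gar_api_generator()`, assuming the publish endpoint and body fields given there.

```r
# Hypothetical wrapper: `send_one` is any function that takes one URL and
# returns a list (for example, a parsed API response).
send_urls <- function(urls, send_one) {
  stopifnot(is.character(urls), length(urls) <= 200)  # stay within the daily quota
  rows <- lapply(urls, function(u) {
    # Capture failures per URL so one bad request does not stop the batch.
    res <- tryCatch(send_one(u), error = function(e) list(error = conditionMessage(e)))
    data.frame(
      url = u,
      status = if (is.null(res$error)) "ok" else "error",
      detail = if (is.null(res$error)) NA_character_ else res$error,
      stringsAsFactors = FALSE
    )
  })
  do.call(rbind, rows)
}

# Offline stand-in for the real request.
fake_send <- function(u) {
  if (!grepl("^https?://", u)) stop("invalid URL")
  list(url = u)
}

# With real credentials, `send_one` could instead wrap googleAuthR
# (assumed endpoint and arguments; not called here):
# publish <- googleAuthR::gar_api_generator(
#   "https://indexing.googleapis.com/v3/urlNotifications:publish",
#   "POST", data_parse_function = function(x) x
# )
# real_send <- function(u) publish(the_body = list(url = u, type = "URL_UPDATED"))

result <- send_urls(c("https://example.com/a", "not-a-url"), fake_send)
print(result)
```

Passing the sender in as an argument is a design choice made for this sketch: it lets you check the data frame logic offline before spending any of the daily quota.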

You can use the script to update or delete pages. You just have to change line 38:

Use type = "URL_UPDATED" if you have to update the page

Use type = "URL_DELETED" if you have to remove the page
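Instead of editing a line in the script, the notification type could also be exposed as a function argument. The helper below is a hypothetical sketch, not part of the original script; it assumes the request body is a list with `url` and `type` fields and only builds that body without sending anything.

```r
# Hypothetical helper: build the request body for a single notification.
notification_body <- function(url, type = c("URL_UPDATED", "URL_DELETED")) {
  # Errors unless the value matches one of the two supported types;
  # defaults to "URL_UPDATED" when no type is given.
  type <- match.arg(type)
  list(url = url, type = type)
}

update_body <- notification_body("https://example.com/job/123")
delete_body <- notification_body("https://example.com/job/123", "URL_DELETED")
str(delete_body)
```

Calling the helper with any other value, such as `"URL_REMOVED"`, stops with an error before a request is made, so a typo does not cost you part of the quota.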
