Crawling with rvest

If you want to crawl a couple of URLs for SEO purposes, there are many ways to do it, but one of the most reliable and versatile packages you can use is rvest.

Here is a simple demo from the package documentation using the IMDb website:

# Package installation, instruction to be run only once

install.packages("rvest")

# Loading the rvest package

library(rvest)

The first step is to crawl the URL and store the webpage inside a ‘lego_movie’ variable.

lego_movie <- read_html("http://www.imdb.com/title/tt1490017/")

Quite straightforward, isn’t it?

lego_movie is an xml_document that needs to be parsed in order to extract the data. Here is how to do it:

rating <- lego_movie %>%
  html_nodes("strong span") %>%
  html_text() %>%
  as.numeric()

For those who don’t know it, the %>% operator passes the result on its left as the first argument of the function on its right. The html_nodes() function extracts from our webpage the HTML tags that match a CSS-style query selector. In this case, we are looking for a <span> tag nested inside a <strong> tag. The script then extracts the inner text value using html_text() and converts it to a number using as.numeric().

Finally, it stores this value in the rating variable. To display the value, just write:

rating

# it should display > [1] 7.8

Let’s take another example. This time we are going to grab the movie’s cast. Having a look at the HTML DOM, it seems that we need to grab the HTML <img> tags found under the ‘titleCast’ id and the ‘primary_photo’ class name, and then extract the alt attribute.
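As a minimal sketch of that extraction: the snippet below runs against a small inline HTML fragment rather than the live page, because the IMDb markup may have changed. The fragment and the selector "#titleCast .primary_photo img" are assumptions based on the description above.

```r
library(rvest)

# Hypothetical fragment imitating the cast markup described above;
# the live IMDb page may be structured differently today.
cast_page <- read_html('
  <table id="titleCast">
    <tr><td class="primary_photo"><img alt="Will Arnett" src="a.jpg"></td></tr>
    <tr><td class="primary_photo"><img alt="Elizabeth Banks" src="b.jpg"></td></tr>
  </table>')

# Select every <img> under #titleCast / .primary_photo, then read its alt text
cast <- cast_page %>%
  html_nodes("#titleCast .primary_photo img") %>%
  html_attr("alt")

cast
```

On the real page, the same pipeline would start from lego_movie instead of cast_page.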

Last example: we want the movie poster URL. The first step is to grab the <img> tag whose parent has the class name ‘poster’, then extract its src attribute and display it.
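Here is a hedged sketch of that step, again using a made-up inline fragment instead of the live page. The fragment, the example.com URL and the selector ".poster img" are illustrative assumptions.

```r
library(rvest)

# Hypothetical fragment: an <img> nested inside an element with class "poster"
poster_page <- read_html('
  <div class="poster">
    <a href="#"><img alt="Poster" src="https://example.com/poster.jpg"></a>
  </div>')

# Grab the image under .poster and extract its src attribute
poster <- poster_page %>%
  html_nodes(".poster img") %>%
  html_attr("src")

poster
```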

Now a real-life crawl example

Now that we’ve seen an example by the book, we’ll switch to something more useful and a little bit more complex. Using the following tutorial, you’ll be able to extract the review scores of any WordPress plugin over time.

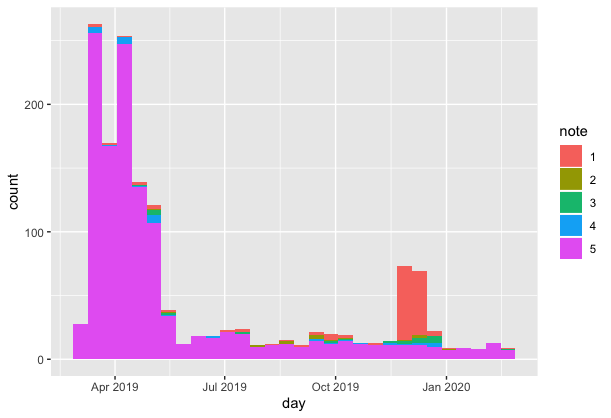

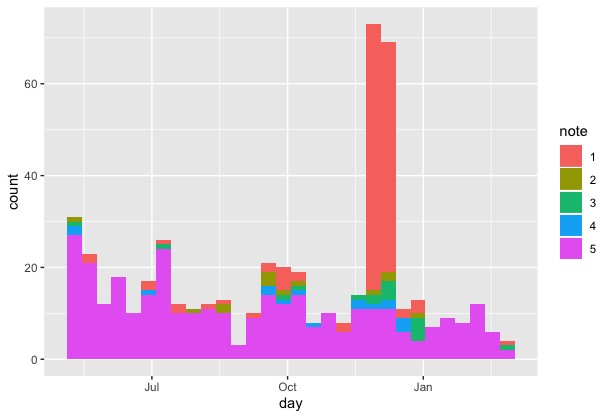

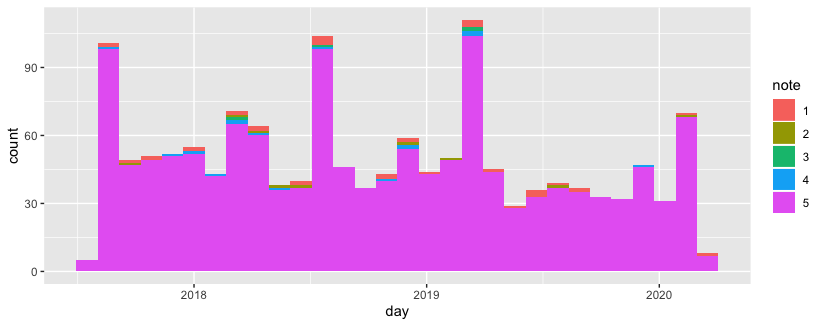

For example, here are the stats for Yoast, the famous SEO plugin:

Here are the ones for All in One SEO, its competitor:

This is very useful for checking whether your favourite plugin’s new release is well received or not.

But before that, a little warning: the source code I’m about to show you was written by me. It’s full of flaws and includes a couple of Stack Overflow copy-pastes, but… it works. 😅 So, dear practitioners, please don’t judge me. It’s one of the beauties of R: you reach your goal relatively easily.

(But I gladly accept any ideas to make this code easier for beginners; don’t hesitate to contact me.)

So let’s get to it. The first step is to grab a reviews page URL. On this one, we have 49 pages of reviews.

We’ll have to write a loop to go through each page. Another problem is that no dates are displayed, only durations, so we’ll have to convert them.

As usual, we’ll first load the necessary packages. If they are not installed yet, run the install.packages() function as seen before.

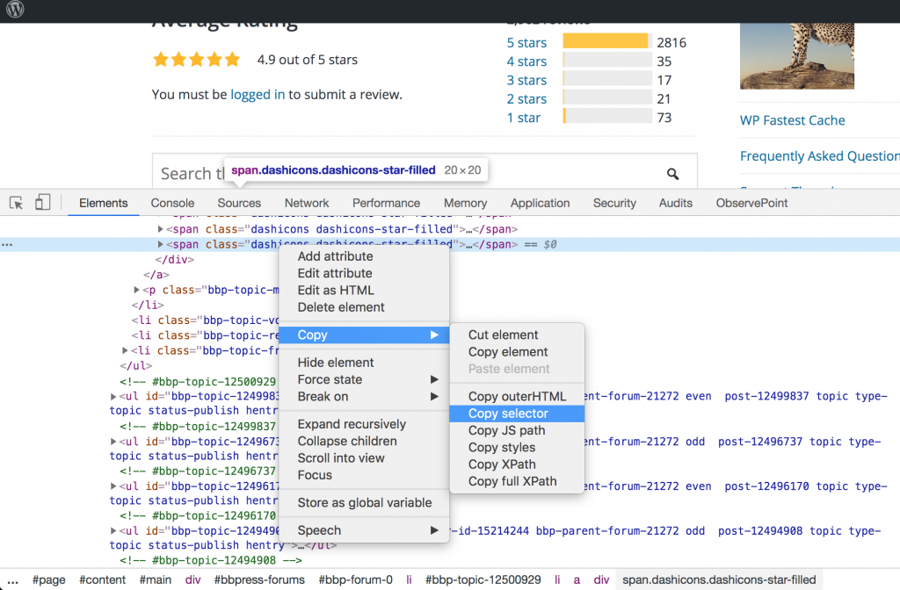

If you need help selecting elements, the Chrome inspector is great. You can copy and paste XPath and CSS selectors directly:

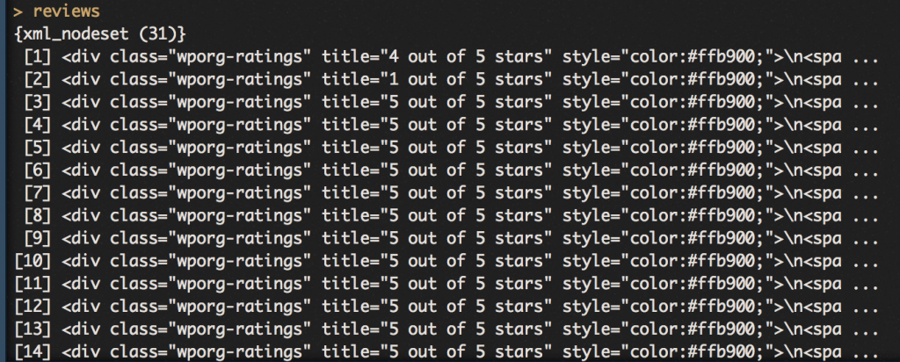
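To illustrate how both kinds of copied selectors can be used, here is a small sketch. html_nodes() accepts either a CSS selector or an xpath argument. The HTML fragment and the selectors are invented for the example and are not taken from the WordPress site.

```r
library(rvest)

# Hypothetical fragment standing in for a page inspected in Chrome
page <- read_html(
  '<div id="reviews"><ul><li class="review">Great plugin</li></ul></div>')

# CSS selector, in the style produced by "Copy selector"
by_css <- page %>%
  html_nodes("#reviews > ul > li") %>%
  html_text()

# Equivalent XPath, in the style produced by "Copy XPath"
by_xpath <- page %>%
  html_nodes(xpath = '//*[@id="reviews"]/ul/li') %>%
  html_text()

# Both approaches select the same element
identical(by_css, by_xpath)
```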

In other words, it transforms this hard-to-handle HTML data

into a clean data frame with nice columns.
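As a rough sketch of the kind of pagination loop described above (not the original script), the code below parses each page and stacks the results into one data frame. Everything specific here is an assumption: the parse_reviews() helper, the class names (.review, .review-rating, .review-age), the data-rating attribute and the inline pages are placeholders, so inspect the real reviews page to find the right selectors.

```r
library(rvest)

# Hypothetical helper: turn one parsed reviews page into a data frame.
# The selectors and the data-rating attribute are placeholders.
parse_reviews <- function(page) {
  data.frame(
    rating = as.numeric(html_attr(html_nodes(page, ".review .review-rating"),
                                  "data-rating")),
    age = html_text(html_nodes(page, ".review .review-age"), trim = TRUE),
    stringsAsFactors = FALSE
  )
}

# Two made-up pages standing in for the paginated reviews
pages <- c(
  '<div class="review"><span class="review-rating" data-rating="5"></span>
     <span class="review-age">2 days ago</span></div>
   <div class="review"><span class="review-rating" data-rating="4"></span>
     <span class="review-age">3 weeks ago</span></div>',
  '<div class="review"><span class="review-rating" data-rating="1"></span>
     <span class="review-age">1 year, 2 months ago</span></div>'
)

results <- vector("list", length(pages))
for (i in seq_along(pages)) {
  # In a real crawl, the page would be fetched from its URL with read_html()
  page <- read_html(pages[i])
  results[[i]] <- parse_reviews(page)
}

# Stack the per-page data frames into a single one
all_reviews <- do.call(rbind, results)
all_reviews
```

Collecting each page in a list and binding once at the end avoids growing the data frame inside the loop.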

The next step is to convert these durations into days. It’s going to be quick:
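One possible way to do this conversion in base R is sketched below. The duration format ("3 weeks ago", "1 year, 2 months ago") and the duration_to_days() helper are assumptions for illustration, and months and years are approximated as 30 and 365 days.

```r
# Hypothetical helper: convert strings such as "1 year, 2 months ago"
# into an approximate number of days.
duration_to_days <- function(x) {
  days_per_unit <- c(day = 1, week = 7, month = 30, year = 365)
  # Extract every "<number> <unit>" pair, e.g. "1 year" and "2 month"
  parts <- regmatches(x, gregexpr("[0-9]+ (day|week|month|year)", x))
  vapply(parts, function(p) {
    # Durations without a recognised unit (e.g. hours) become NA
    if (length(p) == 0) return(NA_real_)
    n <- as.numeric(sub(" .*", "", p))   # the number before the space
    unit <- sub(".* ", "", p)            # the unit after the space
    sum(n * days_per_unit[unit])
  }, numeric(1))
}

days <- duration_to_days(c("1 year, 2 months ago", "3 weeks ago", "5 days ago"))
days

# Approximate review dates, counted back from today
review_dates <- Sys.Date() - days
```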

The data is now ready. Export it or make a small graph to display it using the ggplot2 package.
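A minimal sketch of both options follows, assuming a data frame with a rating column and a days_ago column. The sample values, column names and file name are made up for illustration.

```r
library(ggplot2)

# Made-up sample data standing in for the crawled reviews
reviews <- data.frame(
  days_ago = c(5, 21, 400, 425),
  rating = c(5, 4, 3, 1)
)

# Turn the day counts into dates, then average the ratings per month
reviews$date <- Sys.Date() - reviews$days_ago
reviews$month <- as.Date(format(reviews$date, "%Y-%m-01"))
monthly <- aggregate(rating ~ month, data = reviews, FUN = mean)

# Export to CSV (written to a temporary folder in this sketch)
csv_path <- file.path(tempdir(), "plugin_reviews.csv")
write.csv(monthly, csv_path, row.names = FALSE)

# Plot the average rating over time
p <- ggplot(monthly, aes(x = month, y = rating)) +
  geom_line() +
  geom_point() +
  labs(x = "Month", y = "Average rating")
p
```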
